iefan 🕊️

I explore & build stuff on ℕostr | Certified in Cloud, Code & CyberSec | I spread #Positivity & discuss things/ideas.

Try my Nostr projects:

AppCore: w3.do/R2SlyCby

Nostr backup: nostrsync.vercel.app

Nostr dashboard: webcore.live

LN Paywall: zpay.live

iefan 🕊️

4/22 10:05:40

So glad to see you have the tools now to turn your ideas into reality. I think this trend will completely revolutionize computer science. Please keep building.

Ryan

4/22 9:57:06

This site is all Karnage's work. nostr:nprofile1qqsfeg9aw3g8gtt2yqcecr3af3nee8syd2wuwr5w74wzjp0zgpfrgzcpz4mhxue69uhhyetvv9ujuerpd46hxtnfduhszrnhwden5te0dehhxtnvdakz7qg3waehxw309ahx7um5wgh8w6twv5hsler5rm started using the hashtag a while back, and I loved the idea and joined in.

Thankfully, we use a common format for the notes we post; I think that made them pretty easy to parse. The site looks good 💯
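Parsing hashtag-based notes like these could look roughly like the minimal Python sketch below. It assumes film notes are ordinary kind-1 text notes carrying the conventional `["t", "kinostr"]` hashtag tag, and that the cover and video appear as plain URLs in the note text. The function names and the extension-based heuristic are hypothetical and not how kinostr.com actually works; only the `REQ` message shape and the `#t` tag filter come from NIP-01.

```python
import json
import re

# NIP-01: a client subscribes with ["REQ", <subscription_id>, <filter>].
# A "#t" key in the filter matches events that carry a ["t", "<value>"] tag.
def build_kinostr_req(subscription_id: str, limit: int = 100) -> str:
    nostr_filter = {"kinds": [1], "#t": ["kinostr"], "limit": limit}
    return json.dumps(["REQ", subscription_id, nostr_filter])

# Hypothetical heuristic: classify links in the note text by file extension.
IMAGE_EXTS = (".jpg", ".jpeg", ".png", ".webp", ".gif")
VIDEO_EXTS = (".mp4", ".webm", ".mov")
URL_RE = re.compile(r"https?://\S+")

def _has_ext(url: str, exts: tuple) -> bool:
    # Drop any query string before checking the extension.
    return url.split("?", 1)[0].lower().endswith(exts)

def parse_film_note(content: str) -> dict:
    """Pick the first image URL as the cover and the first video URL as the film."""
    urls = URL_RE.findall(content)
    cover = next((u for u in urls if _has_ext(u, IMAGE_EXTS)), None)
    video = next((u for u in urls if _has_ext(u, VIDEO_EXTS)), None)
    return {"cover": cover, "video": video}
```

Relays answer such a subscription with `["EVENT", <subscription_id>, <event>]` messages, and `parse_film_note` would then run on each event's `content` field. A shared posting format is what lets a heuristic this simple work at all.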

Karnage

4/22 9:28:44

https://kinostr.com/ is born.

Though if you upload anything illegal, it’ll likely get taken down fast.

To add a film, you need to include the #kinostr hashtag and the video link, and preferably the film's cover image as well.
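For illustration, a note following this convention could be assembled as in the Python sketch below. It builds an unsigned kind-1 event with the hashtag carried both in the text and as a `["t", "kinostr"]` tag, and computes the event id the way NIP-01 specifies: SHA-256 over the compact JSON array `[0, pubkey, created_at, kind, tags, content]`. The helper name and the content layout are assumptions, and signing (BIP-340 Schnorr) is left to your Nostr client or library.

```python
import hashlib
import json
import time
from typing import Optional

def make_film_note(pubkey_hex: str, title: str, cover_url: str, video_url: str,
                   created_at: Optional[int] = None) -> dict:
    """Build an UNSIGNED kind-1 event for a #kinostr film post (hypothetical layout)."""
    content = f"{title} #kinostr\n{cover_url}\n{video_url}"
    tags = [["t", "kinostr"]]  # hashtag tag, so relays can filter on "#t"
    if created_at is None:
        created_at = int(time.time())
    # NIP-01 event id: sha256 of this compact JSON array, UTF-8 encoded.
    # Caveat: json.dumps escapes rare control characters as \uXXXX, which NIP-01
    # does not; ordinary titles and URLs are unaffected.
    serialized = json.dumps([0, pubkey_hex, created_at, 1, tags, content],
                            separators=(",", ":"), ensure_ascii=False)
    event_id = hashlib.sha256(serialized.encode("utf-8")).hexdigest()
    return {"id": event_id, "pubkey": pubkey_hex, "created_at": created_at,
            "kind": 1, "tags": tags, "content": content}
```

Keeping the hashtag in both places means plain-text readers see it, while relays can match the tag directly instead of searching note text.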

A better way would be to allow streaming of torrents. This is more of a vibe hack proof of concept.

Enjoy!

iefan 🕊️

2/27 16:19:25

i think the next decade is going to be very interesting, not just for computer science, but for business and other forms of engineering.

i don’t think many people truly realize that these models/agents might make the entire concept of a workforce irrelevant, especially in technical fields.

someone with a deeper understanding, who can be more specific with these models, might single-handedly outcompete every casual worker. people & companies are not ready for it.

iefan 🕊️

2/27 15:51:01

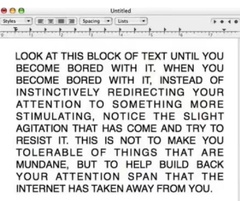

Should you still learn coding even if AI automates it all in the next decade? Absolutely. Think about it: people still learned C even if they never built anything with it. Why? Because it gave them a deep understanding of computers: how they think, how they process data, how memory works. Without that foundation, the code AI spits out will just feel like magic. You won’t know if it’s right, if it’s efficient, or even how to fix it when it breaks.

In fact, this might be the best time to learn computer science. If you know what you’re doing, you could soon be running hundreds of AI agents, automating work exactly how you want. You’ll be the one calling the shots, not just relying on whatever AI gives you. With the right skills, you could outcompete entire companies, right from your own room. And if you’re paying attention, you already know this is coming.

iefan 🕊️

2/25 8:54:14

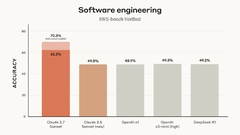

it's the best model for coding & math stuff. nothing else even comes close!

iefan 🕊️

2/25 8:24:25

this is a serious violation of people’s basic right to privacy. they’re using this spyware to monitor us 24/7, tracking our every activity. this future is unacceptable. nostr:note1e0ga8cf7cy78q0hqr3dzztrjz7hchtcug7cqnepd26k4e6kcnqrq5lserj

iefan 🕊️

2/25 8:13:50

Google secretly installed this app on various Android devices without users’ permission.

Reports suggest that it can scan through your photo gallery and take up 2GB of storage.

You might want to check your phones; you can just uninstall it from the Play Store: https://play.google.com/store/apps/details?id=com.google.android.safetycore

iefan 🕊️

2/23 22:34:19

Typically, setting something like this up involves building your own RAG system with a database and powerful servers, or essentially retraining the model.

But with this massive 2-million-token context window, my implementation skips all that. You essentially fine-tune the model by talking to it. This conversation can include text, media, PDFs, and instructions; you can upload all this data & these instructions in bulk & keep modifying them.

Once the model acts the way you want and has the knowledge you need, you just save that state as a personality, which is stored locally.

Then, the next time you open it, you start right where you left off. And you can reuse that state whenever you want, as many times as you want.

This approach is much more accessible. Building and maintaining a RAG system isn't easy. Also, with this method the model understands the context much more readily than with traditional RAG. The reason we don't use context more widely for model training is that we just haven't had large enough context windows. Only Google's models offer them at this scale, for now.

Karnage

2/23 22:09:02

So, like having a project folder to add data to? I know Perplexity allows that, and ChatGPT does too, but I don’t know what token limits they have. 2M is good - it’s a decent conversation size!

iefan 🕊️

2/23 22:05:49

💕

Their models don't have Gemini's 2-million-token context window, & my implementation gives the model its literal system prompt for instructions.

This approach differs technically. Instead of using a remote database & powerful computers for a RAG setup, it builds personalities from chat context saved locally for each personality.

Think of each personality as a kind of half-conversation. You give the model instructions, data, and fine-tuning for a specific task. Then, you save that state. When you want to do that task again, you just load the saved personality.
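One way to picture a saved personality is as a small local file holding the system prompt plus the prior turns of that half-conversation. The Python sketch below assumes a generic role/content message list, as many chat APIs use, and one JSON file per personality. The class, the function names, and the file layout are hypothetical rather than the author's implementation, and the actual model call is left out.

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path

@dataclass
class Personality:
    name: str
    system_prompt: str
    # The "half-conversation": earlier turns that load instructions and data into context.
    messages: list = field(default_factory=list)

    def add_turn(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

def save_personality(p: Personality, folder: Path) -> Path:
    """Write the personality to <folder>/<name>.json on local disk."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{p.name}.json"
    path.write_text(json.dumps(asdict(p), ensure_ascii=False, indent=2), encoding="utf-8")
    return path

def load_personality(name: str, folder: Path) -> Personality:
    data = json.loads((folder / f"{name}.json").read_text(encoding="utf-8"))
    return Personality(**data)

def start_session(p: Personality, user_message: str) -> list:
    """Rebuild the request context: system prompt, saved turns, then the new message."""
    return ([{"role": "system", "content": p.system_prompt}]
            + list(p.messages)
            + [{"role": "user", "content": user_message}])
```

Because the whole state is replayed as context on every session, nothing is stored server-side, which is the trade-off against a RAG database: simpler to run, but bounded by the model's context window.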

iefan 🕊️

2/23 18:47:41

One interesting aspect of these LLMs is their general-purpose nature. E.g., a model proficient in coding is likely also adept at writing or understanding data.

Other factors, such as context window, also play a role. The model I'm using has a 2-million-token context window, roughly equivalent to 20-30 books per personality.
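As a rough check of that estimate, spreading 2 million tokens over 20 to 30 books gives the per-book budget below. The words-per-token figure is an assumed rule of thumb for English text (about 0.75 words per token); the real ratio depends on the tokenizer and the language.

```latex
\begin{aligned}
\frac{2\,000\,000\ \text{tokens}}{30\ \text{books}} &\approx 66\,700\ \text{tokens/book}, &
\frac{2\,000\,000\ \text{tokens}}{20\ \text{books}} &= 100\,000\ \text{tokens/book},\\
66\,700 \times 0.75 &\approx 50\,000\ \text{words/book}, &
100\,000 \times 0.75 &= 75\,000\ \text{words/book}.
\end{aligned}
```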

Consequently, you don't need 10 different AI apps to handle 10 different tasks. A single AI app, with its behavior modified, can handle all of them, often with a single model.

nostr:note10c5gr8jfakse9tdx6fnzatg57sfzwqy63jq0y298g3apylnjlk2q4vwjx5

iefan 🕊️

2/8 19:32:12

I really hope he finds the help he needs. I went through his feed, and it's really disturbing.

iefan 🕊️

2/7 15:08:19

As much as I like Claude, it’s becoming increasingly clear that not only have they built one of the most heavily censored AIs, but they’re also developing censorship systems for other models.

Its CEO has essentially become the poster boy for AI censorship. I really don’t want to support it anymore.

iefan 🕊️

10/31 10:07:03

Gm, 🫡

The Fishcake (nostr.build)

10/31 9:58:20

GM!

Just released a few minor fixes and optimizations for nostr.build:

- If an uploaded GIF does not achieve at least a 5% size reduction through optimization and resizing, it is left as is.

- We have had support for the no_transform flag in the NIP-96 API, and now the account page lets you enable it for the current session in the account web app.
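The GIF rule in this release note amounts to a simple size threshold. The Python sketch below shows only that decision. It assumes the optimized bytes were already produced by a separate optimize/resize step, and the function name and parameter are hypothetical, not nostr.build's actual code.

```python
def choose_gif_output(original: bytes, optimized: bytes, min_reduction_pct: int = 5) -> bytes:
    """Return the optimized GIF only if it is at least `min_reduction_pct` percent smaller.

    Integer arithmetic avoids floating-point surprises right at the threshold:
    the optimized file must be <= (100 - pct)% of the original size.
    """
    if len(optimized) * 100 <= len(original) * (100 - min_reduction_pct):
        return optimized
    return original  # gain too small: keep the upload as is
```

Treating a reduction of exactly 5% as passing matches the "at least 5%" wording.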

🫡☕️☕️☕️

iefan 🕊️

9/11 10:26:48

💕

Useful Nostr tool: w3.do, a link shortener that uses Nostr relays. It works; I use it all the time.

Built by an awesome dev nostr:nprofile1qqswlsm7jlayltt8neryk7npssqfklx8vpdvavx97445vnftnp4xpuqpr9mhxue69uhhyetvv9ujumn0wdmksetjv5hxxmmd9uzax4ze

Link: https://w3.do